3D Motion Reconstruction for 4D Synthesis


Synthesising high-quality 4D dynamic objects from single monocular video


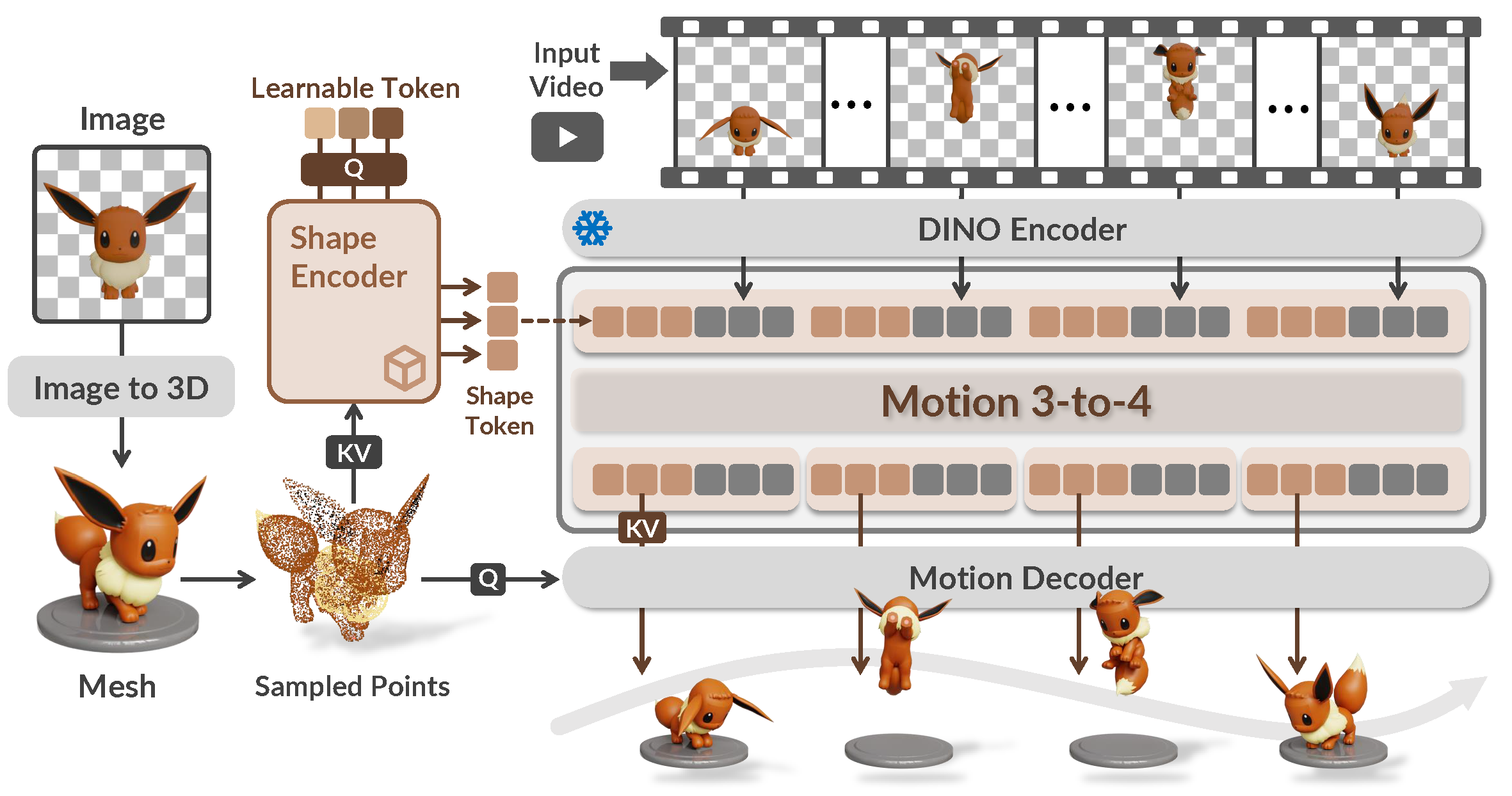

We present Motion 3-to-4, a feed-forward framework for synthesising high-quality 4D dynamic objects from a single monocular video and an optional 3D reference mesh. While recent advances have significantly improved 2D, video, and 3D content generation, 4D synthesis remains difficult due to limited training data and the inherent ambiguity of recovering geometry and motion from a monocular viewpoint.

Motion 3-to-4 addresses these challenges by decomposing 4D synthesis into static 3D shape generation and motion reconstruction. Using a canonical reference mesh, our model learns a compact motion latent representation and predicts per-frame vertex trajectories to recover complete, temporally coherent geometry. A scalable frame-wise transformer further makes the model robust to varying sequence lengths. Evaluations on both standard benchmarks and a new dataset with accurate ground-truth geometry show that Motion 3-to-4 delivers superior fidelity and spatial consistency compared to prior work.
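The benefit of anchoring motion to a canonical mesh can be illustrated with a small sketch. It assumes, purely for illustration, that the motion predictor's output can be expressed as per-frame displacements of the reference mesh's vertices; `build_4d_sequence` is a hypothetical helper, not the authors' code, and the offsets here are hand-made rather than predicted. Because every frame reuses the same vertices and faces, vertex i in frame t always corresponds to vertex i of the reference mesh, and the sequence length T is arbitrary.

```python
import numpy as np


def build_4d_sequence(canonical_vertices, faces, offsets):
    """Hypothetical helper (not the authors' implementation).

    canonical_vertices: (V, 3) float array for the static reference mesh.
    faces: (F, 3) int array of vertex indices, shared by all frames.
    offsets: (T, V, 3) per-frame vertex displacements, standing in for the
        output of a motion predictor; T can be any sequence length.
    Returns a (T, V, 3) array of vertex positions and the shared faces.
    """
    canonical_vertices = np.asarray(canonical_vertices, dtype=float)
    offsets = np.asarray(offsets, dtype=float)
    if offsets.ndim != 3 or offsets.shape[1:] != canonical_vertices.shape:
        raise ValueError("offsets must have shape (T, V, 3)")
    # Broadcasting adds the same canonical shape to every frame, so the
    # topology (faces) and vertex correspondence stay fixed over time.
    positions = canonical_vertices[None, :, :] + offsets
    return positions, faces


# Toy example: a single triangle lifted along z over 4 frames.
tri = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
faces = np.array([[0, 1, 2]])
T = 4
lift = np.zeros((T, 3, 3))
lift[:, :, 2] = np.arange(T)[:, None] * 0.1  # z offset grows by 0.1 per frame
positions, shared_faces = build_4d_sequence(tri, faces, lift)
print(positions.shape)  # (4, 3, 3)
```

Representing motion as trajectories of a fixed vertex set, rather than reconstructing an independent mesh per frame, is what gives the sequence a consistent topology and per-vertex correspondence across time.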

Our framework consists of two main components:

Comparing Motion 3-to-4 with baseline methods


Driving static 3D assets with text prompts and generated videos


We compare our method, Motion 3-to-4, with state-of-the-art 4D generation approaches, including:

@article{chen2026motion3to4,
  title={Motion 3-to-4: 3D Motion Reconstruction for 4D Synthesis},
  author={Chen, Hongyuan and Chen, Xingyu and Zhang, Youjia and Xu, Zexiang and Chen, Anpei},
  journal={arXiv preprint arXiv:2601.14253},
  year={2026}
}